Devin AI 🦍 vs. Manus AI ✋ | My Little Take on Two Popular Autonomous Agents

Hey everyone! Recently, I ran a quick comparison of two popular AI agents: Devin AI and Manus AI. You might know Devin as a specialized AI software engineer, while Manus is more of a general-purpose AI assistant. But how do they stack up against each other in practice?

As one of Devin's early private beta users (I've been testing it for almost a year now), I've had a bit of a head start exploring its features. Manus is newer and only available through a few selected demos on their website, so keep in mind these are probably cherry-picked examples. Still, I think this quick comparison can help us understand what each tool does well and where each could improve.

Alright, let’s jump into the demos!

P.S.: There is a bonus at the end. I’ve noticed Devin gets a bit grumpy when it sees Manus :D

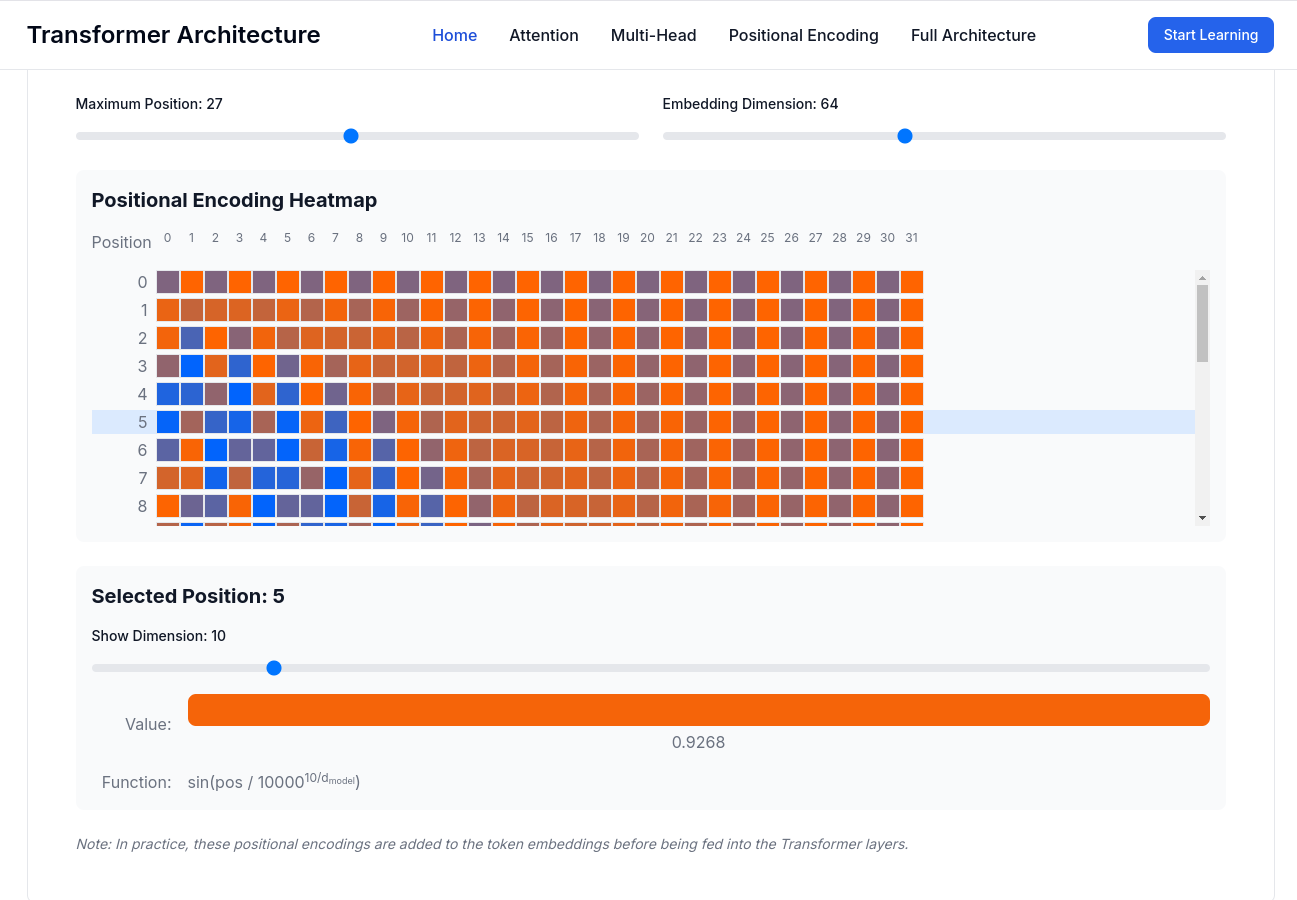

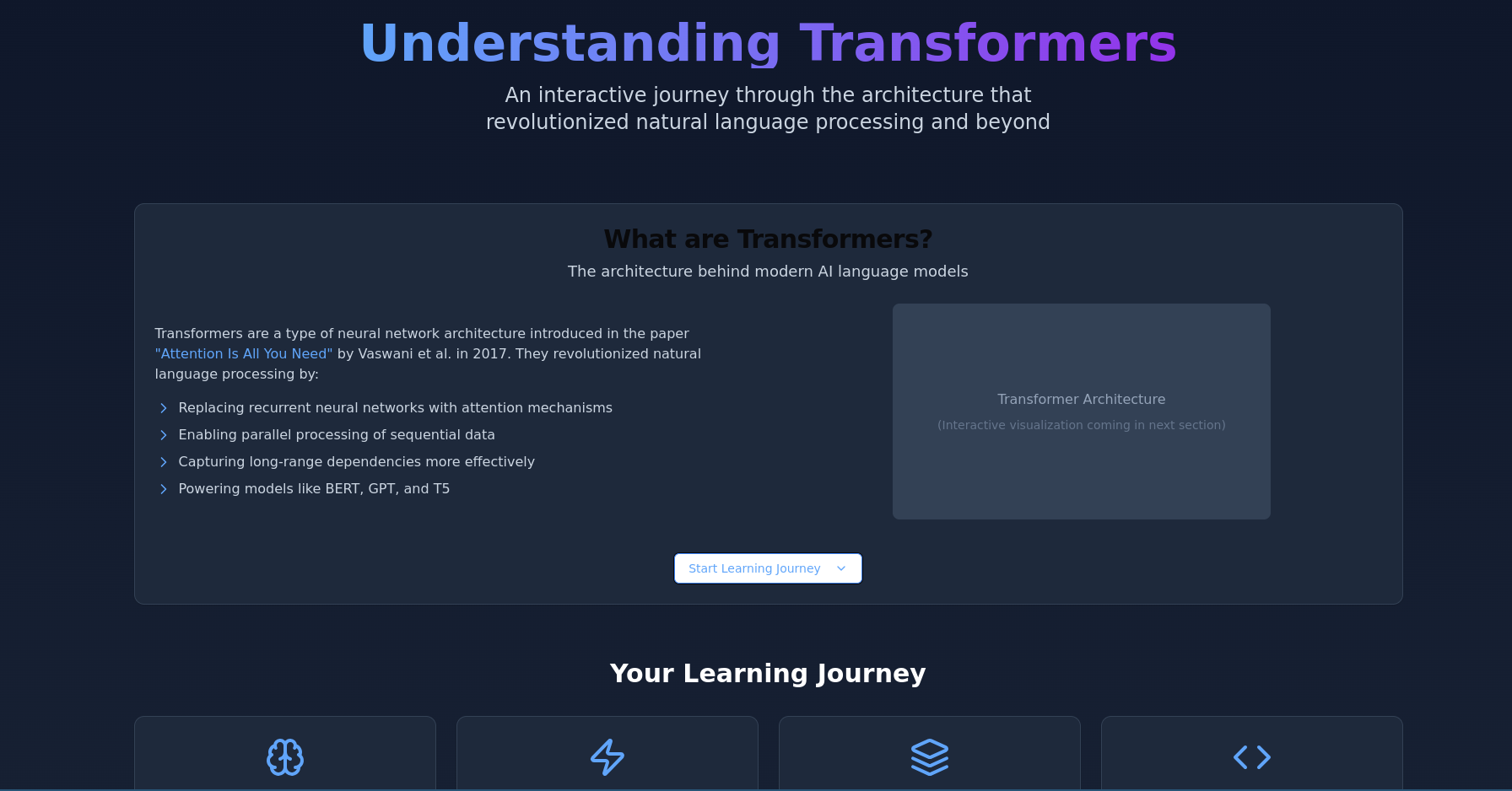

1. Education: Interactive Transformer Webpage 🌐

Prompt:

“Design an interactive webpage explaining the Transformer architecture, complete with visuals and interactive demonstrations.”

My Take: MANUS 1 - DEVIN 0

Devin did a meh job, while Manus's demo was much better. The website Manus generated looked more polished, and while it might not be super practical for actually learning the topic, it was impressive from a development perspective. I also really liked how easy it was to access both the frontend and the chat logs, which made exploring the output feel seamless and well-structured.

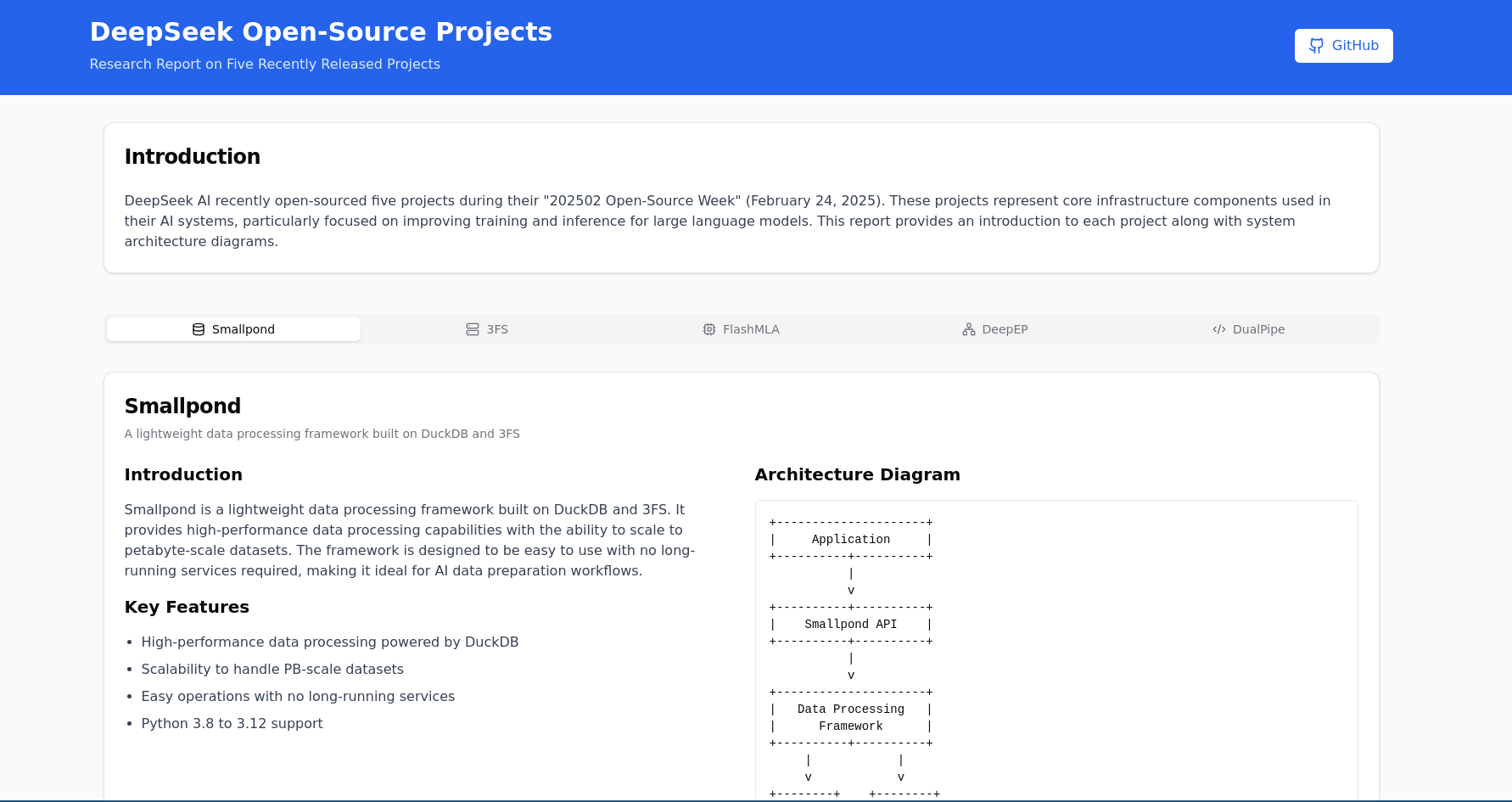

2. Research: Analyzing DeepSeek’s Open-Source Projects 📚

Prompt:

“Help me research and summarize five recent open-source projects by DeepSeek, complete with system architecture diagrams.”

My Take: MANUS 2 - DEVIN 0.5

This task was interesting. Both managed to search the repositories well. For the diagrams, though, Devin tried drawing them in ASCII art 😅, which honestly isn't my favorite approach. Manus chose SVG diagrams, which were clearer and more professional. Neither was anywhere near perfect (both had their share of mistakes), but if I had to pick, Manus did a better job overall.

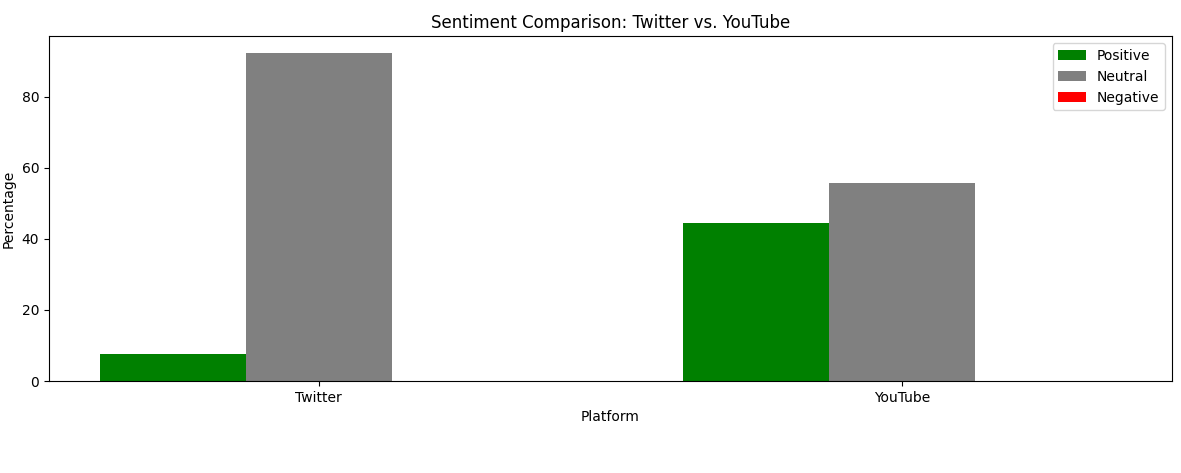

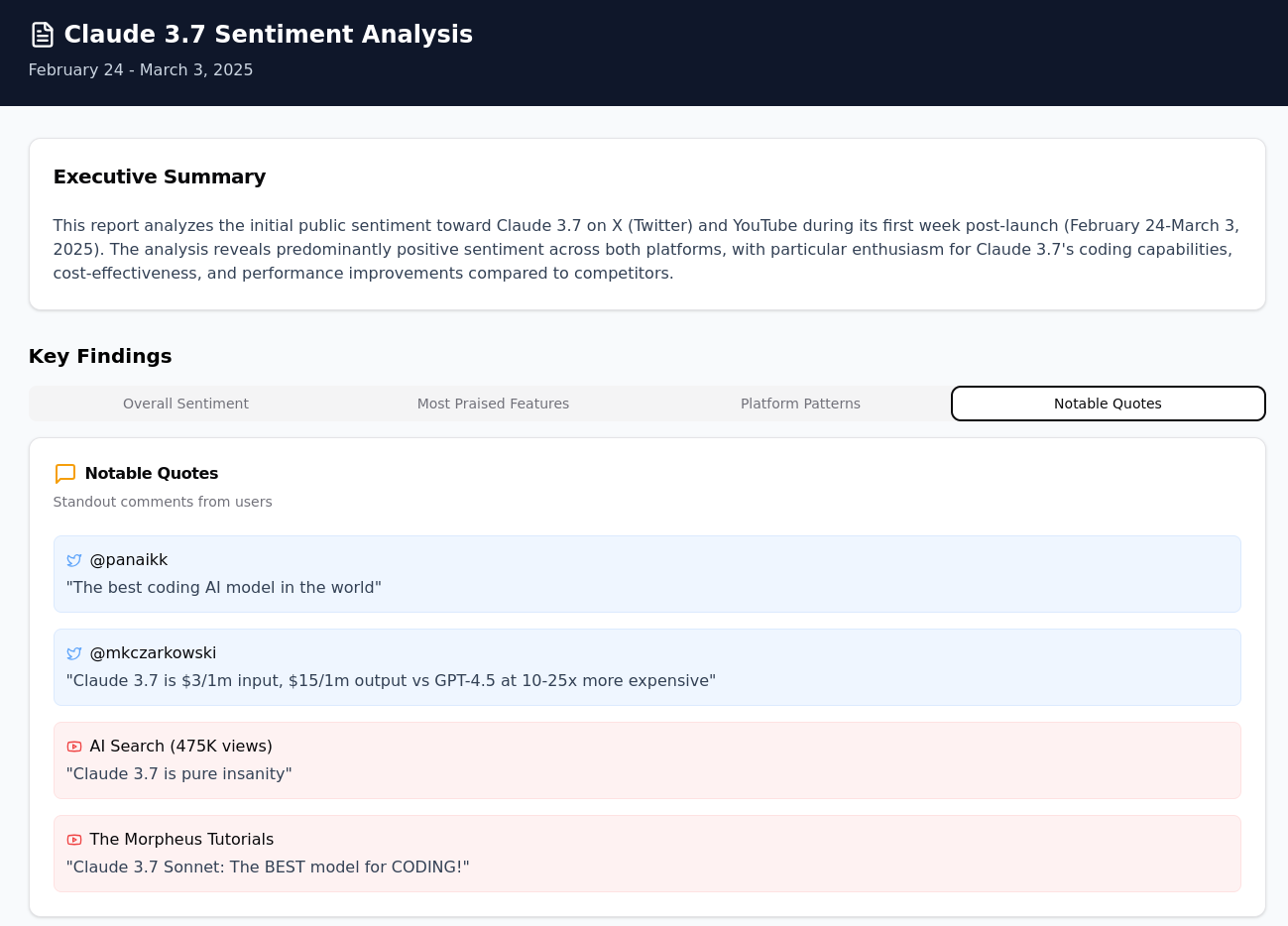

3. Data Analysis: Sentiment Tracking for Claude 3.7 📈

Prompt:

“Analyze initial public sentiment on social media toward Claude 3.7 post-launch.”

My Take: MANUS 3 - DEVIN 1.5

Here, Devin really stepped up. Its website was fairly informative, clearly pulling relevant quotes and organizing the data well. Manus was good too. I felt Devin had the edge, but both did a fair job, and we don't know how Manus would have built a website from its reports.

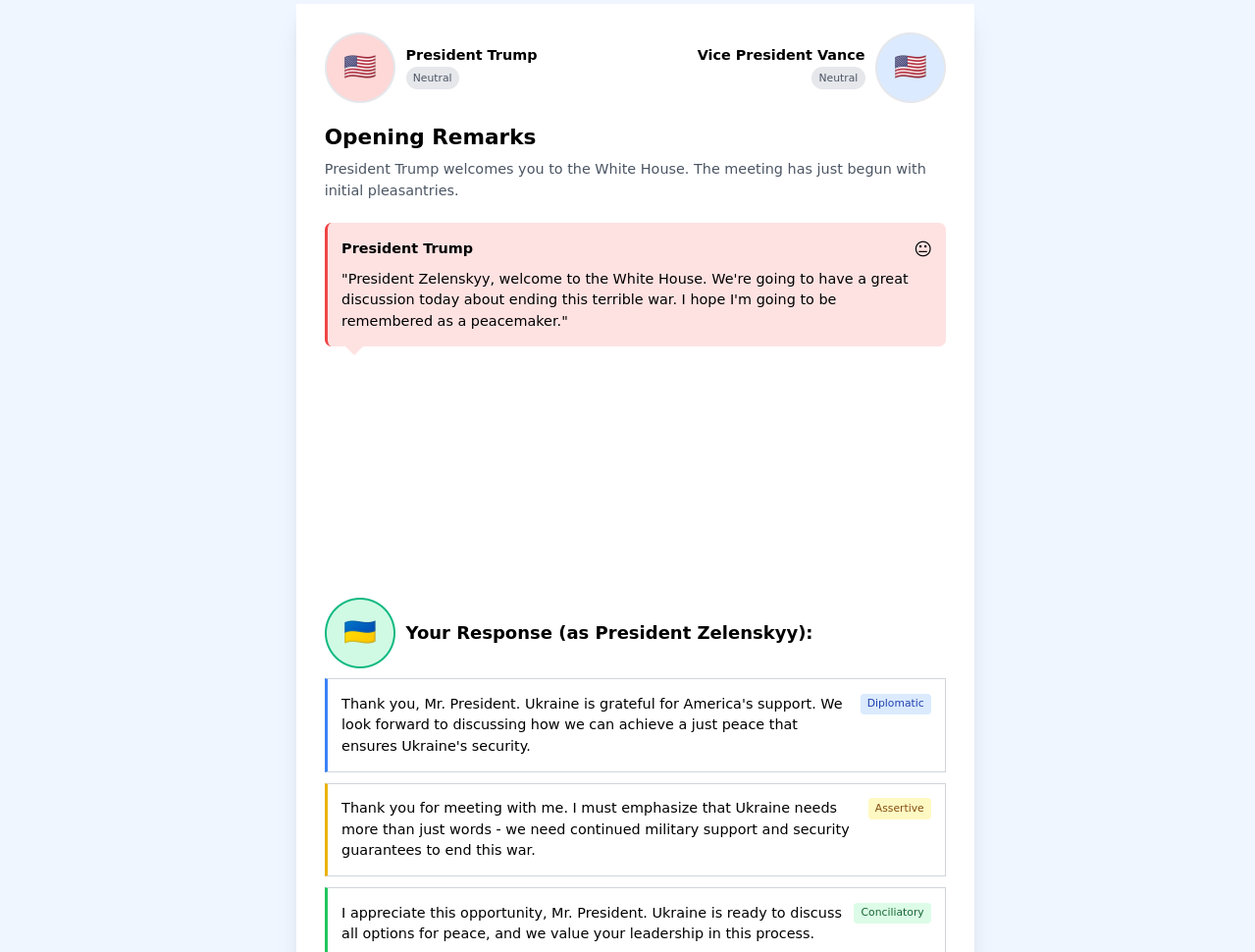

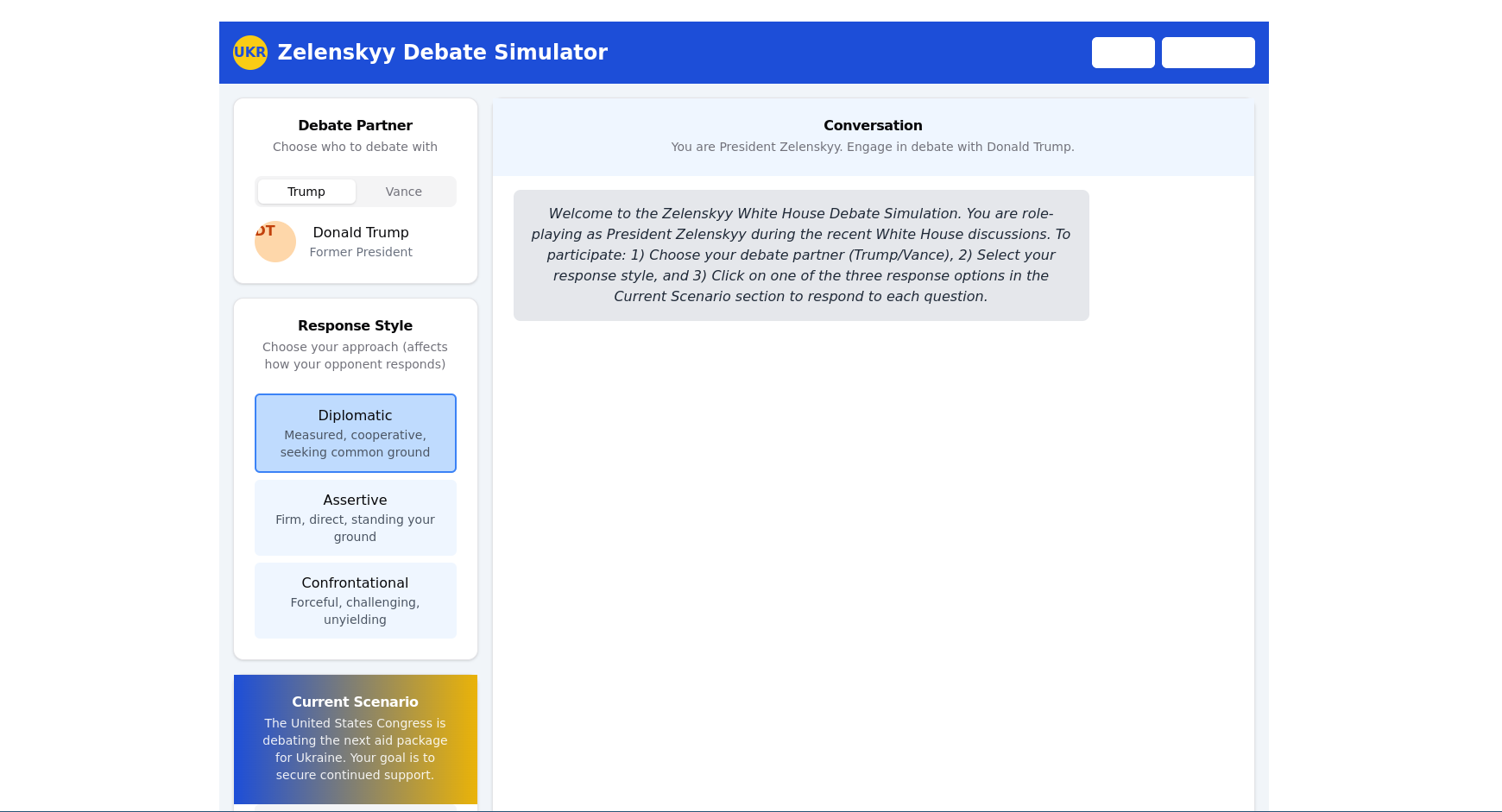

4. WTF Case: Trump vs. Zelensky Simulation 🎭

Prompt:

“Create an interactive simulation where users can role-play as President Zelensky during recent political debates.”

My Take: MANUS 4 - DEVIN 1.5

Honestly, this was the craziest demo of all, and Manus totally crushed it. Devin's simulation was not good, whereas Manus produced something genuinely engaging and (almost) usable. Manus really shines when creativity and interaction come into play.

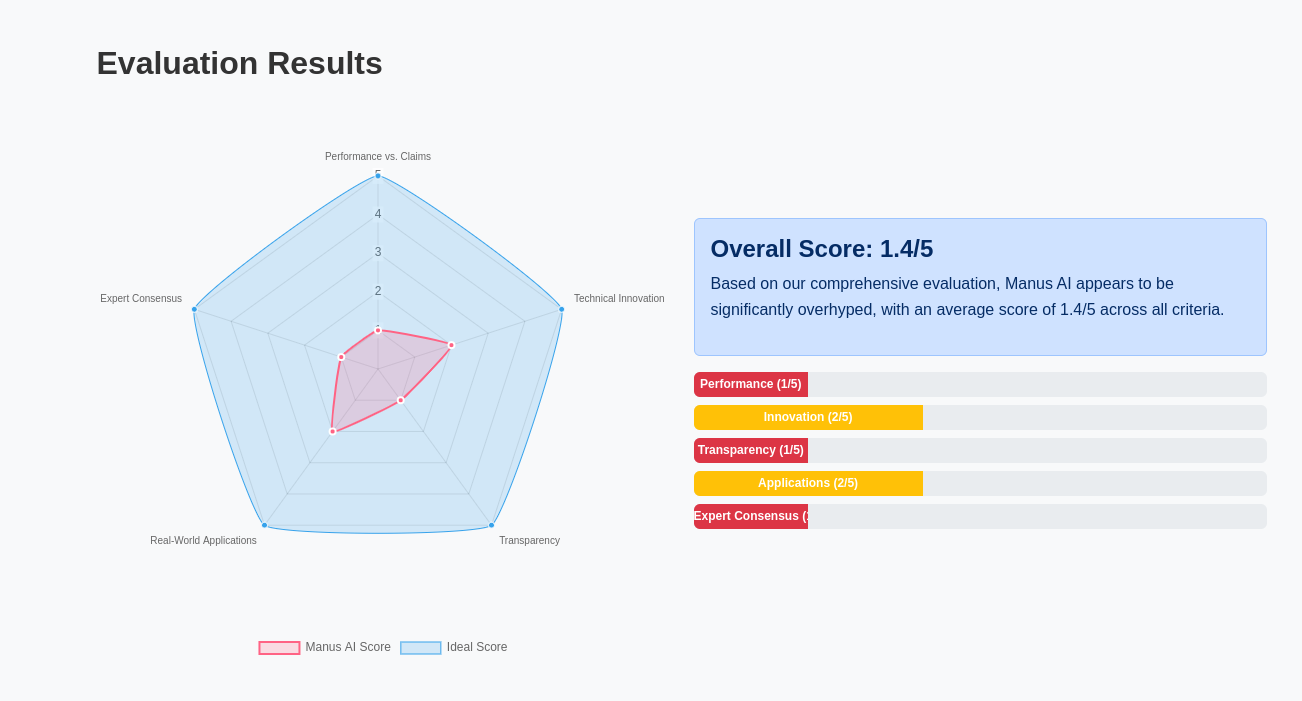

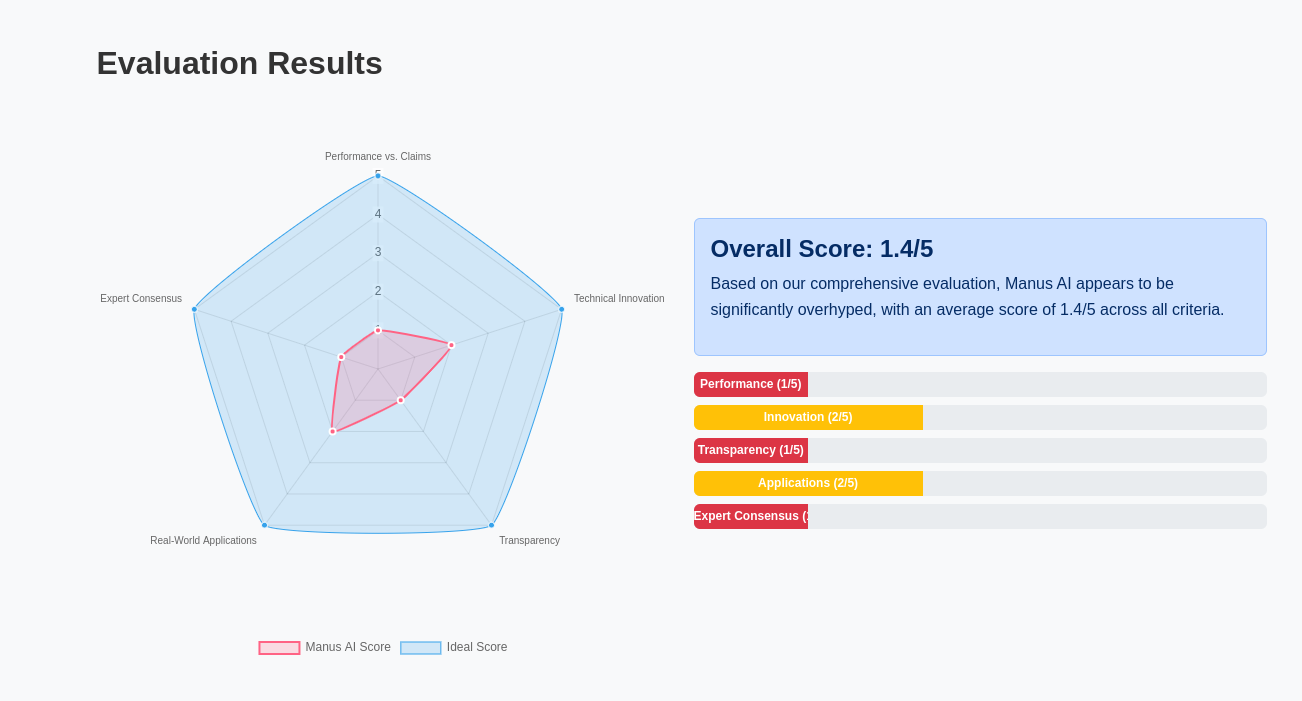

Bonus Fun: Devin’s “Overhype” Analysis 😅

Just for fun, I asked Devin to analyze whether Manus AI is overhyped. Well, Devin got a little heated. Check out the hilarious report, it's a must-read 😂:

My Take: MANUS 4 - DEVIN 1.5 + 🦍

Looks like Devin has some strong opinions about Manus! 😂 It’s amusing to see these AI agents “interacting” this way. It makes testing them feel more personal and fun.

Final Thoughts: Who Wins?

Here’s my overall impression:

- Devin's workflow feels more structured and transparent. You see every step: planning, coding, testing. It's designed for detailed software engineering tasks, especially integrating into existing repos via pull requests. But sometimes this detailed approach hurts it on other kinds of tasks. And I'm not sure it delivers what they promise. Check out this ThePrimeagen video 🦍: https://www.youtube.com/watch?v=QOJSWrSF51o

- Manus, meanwhile, feels broader in scope, yet it consistently delivered more polished final results in general scenarios (like research, design, or even fun simulations).

Overall, Manus feels really good, at least based on the general-purpose demos they shared. It really impressed me in areas where creativity, interactivity, and quick, usable results matter most. Devin remains a solid pick for detailed engineering and structured workflows (though it still needs improvement), but from my initial, very limited exploration, Manus clearly has the edge in broader, general use cases.

I had a ton of fun putting this comparison together, and I hope you found it helpful! If you have any comments or additional resources, or if you've tried these agents yourself, please share below. I'm planning to explore and compare even more AI agents soon, so let's keep this conversation going. We're also planning to share a new agent paper and benchmark, so stay tuned 🖖